Design lead (Myself)

UX designer

Project lead

3 Engineers

Conceptualization

Design

Usability testing

Dev handoff

2 months

Accuracy wasn’t only a machine learning problem; it was also a UX problem of grounding, expectation, and trust. My role was to design interaction patterns, reference systems, and feedback loops that helped the AI remain accurate and trustworthy across diverse data environments.

The knowledge base performed well, improving the AI’s accuracy and its ability to draw on previous answers and context.

User-flagged inaccuracies dropped after we introduced metadata and the glossary.

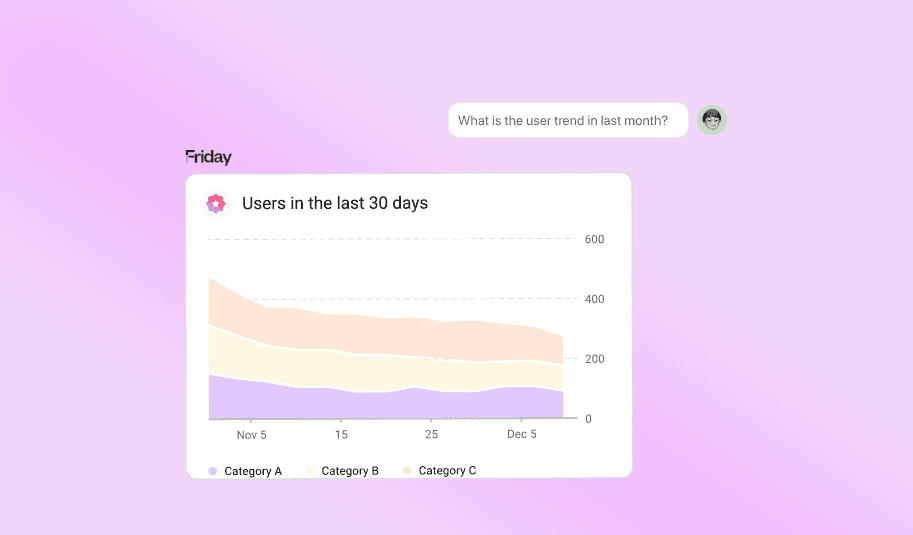

Friday is an AI-powered analytics tool: an agentic solution for data analysts that lets you delve deeper into your analysis, providing detailed insights and a thorough understanding of your data. Chat with your data in natural language.

While the AI excelled on its training database, its performance degraded on unfamiliar datasets.

Before diving into design, I asked: What does “accuracy” actually mean in this context?

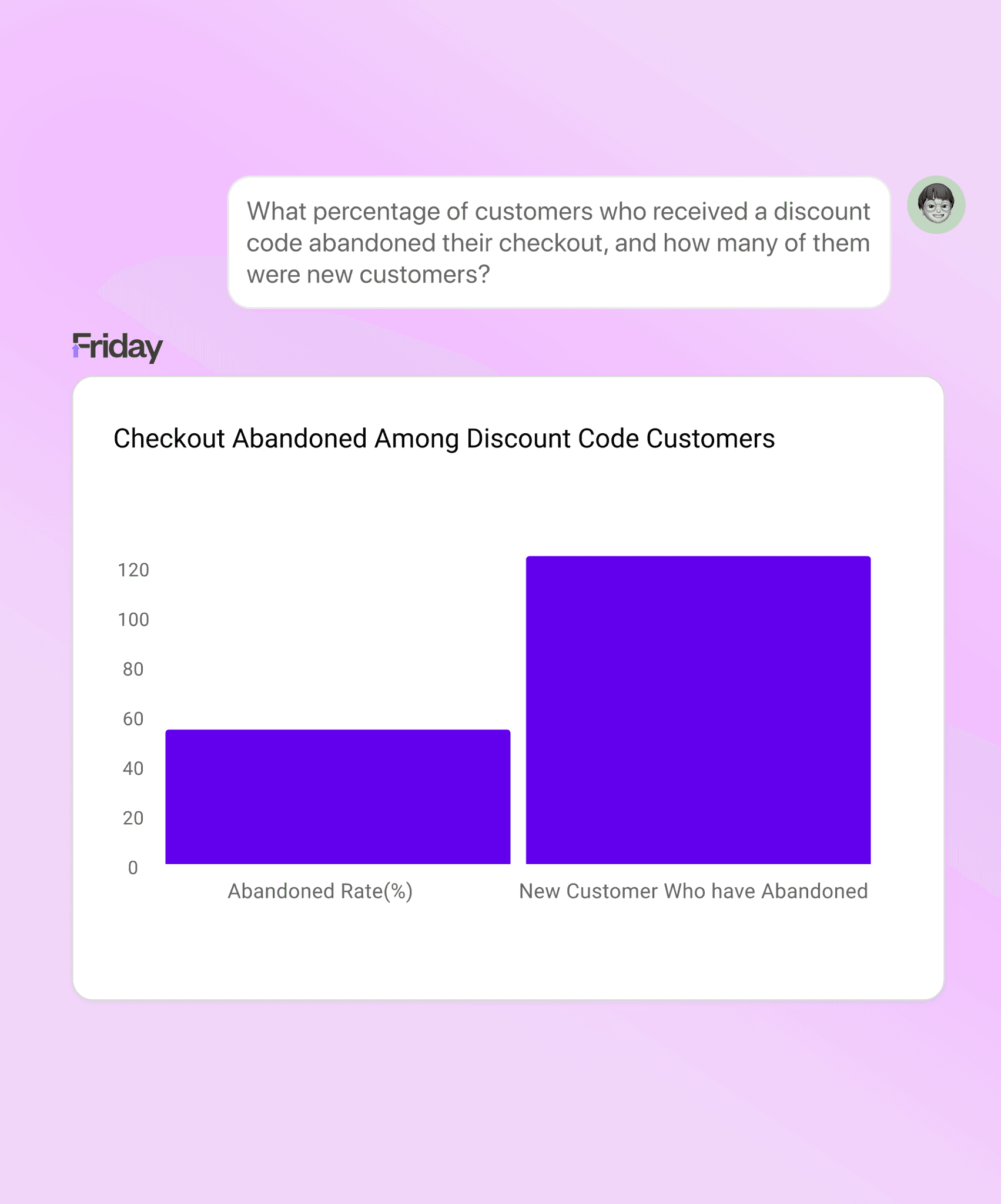

-Ambiguity in natural language queries (“sales” could mean orders, revenue, or bookings).

-Schema inconsistencies across databases (multiple columns named revenue, rev_usd, net_revenue).

-Absence of a shared business glossary.

-LLM hallucinations when reference points were weak.

-User input → Intent interpretation → Mapping terms to schema → Generating SQL → Retrieving results.

-Any misstep (especially in intent-to-schema mapping) led to inaccurate answers.

-Understand where answers come from (grounding + explainability).

-Quickly verify or correct outputs when needed.

-Build trust over time as the AI learns from human feedback.
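The pipeline listed above (user input, intent interpretation, term-to-schema mapping, SQL generation) can be sketched in a few lines. This is only an illustration, not Friday's implementation: the keyword match stands in for the LLM, and `TERM_TO_COLUMN`, `Intent`, and every function name are hypothetical. Retrieving results is left out because it depends on the database. The point is that an ungrounded term such as “sales” is surfaced as an error instead of being guessed.

```python
from dataclasses import dataclass

# Hypothetical mapping from business terms to schema columns (illustrative only).
TERM_TO_COLUMN = {
    "revenue": "orders.net_revenue",
    "orders": "orders.order_id",
}


@dataclass
class Intent:
    metric: str     # the business term the user asked about
    aggregate: str  # SQL aggregate to apply


def interpret_intent(question: str) -> Intent:
    """Intent interpretation: toy keyword match standing in for the LLM."""
    text = question.lower()
    for term in TERM_TO_COLUMN:
        if term in text:
            aggregate = "COUNT" if term == "orders" else "SUM"
            return Intent(metric=term, aggregate=aggregate)
    # An unknown or ambiguous term is reported rather than guessed.
    raise ValueError(f"Could not ground any known term in: {question!r}")


def map_to_schema(intent: Intent) -> str:
    """Mapping terms to schema: business term -> table.column."""
    return TERM_TO_COLUMN[intent.metric]


def generate_sql(intent: Intent, column: str) -> str:
    """Generating SQL from the grounded column."""
    table, col = column.split(".")
    return f"SELECT {intent.aggregate}({col}) FROM {table}"


def answer(question: str) -> str:
    intent = interpret_intent(question)
    column = map_to_schema(intent)
    return generate_sql(intent, column)


print(answer("What was total revenue last month?"))
```

If the mapping step picks the wrong column, every later step still runs without complaint, which is why a misstep there produces a confident but inaccurate answer.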

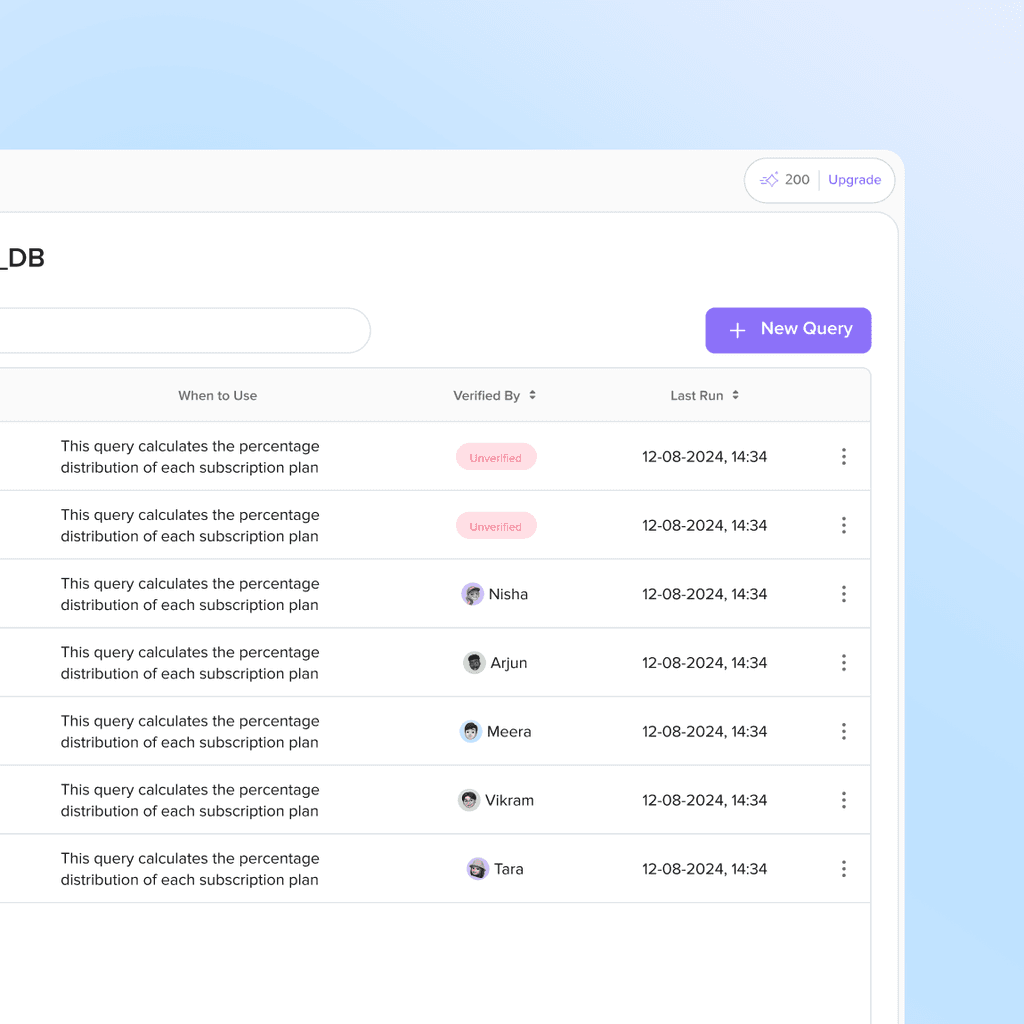

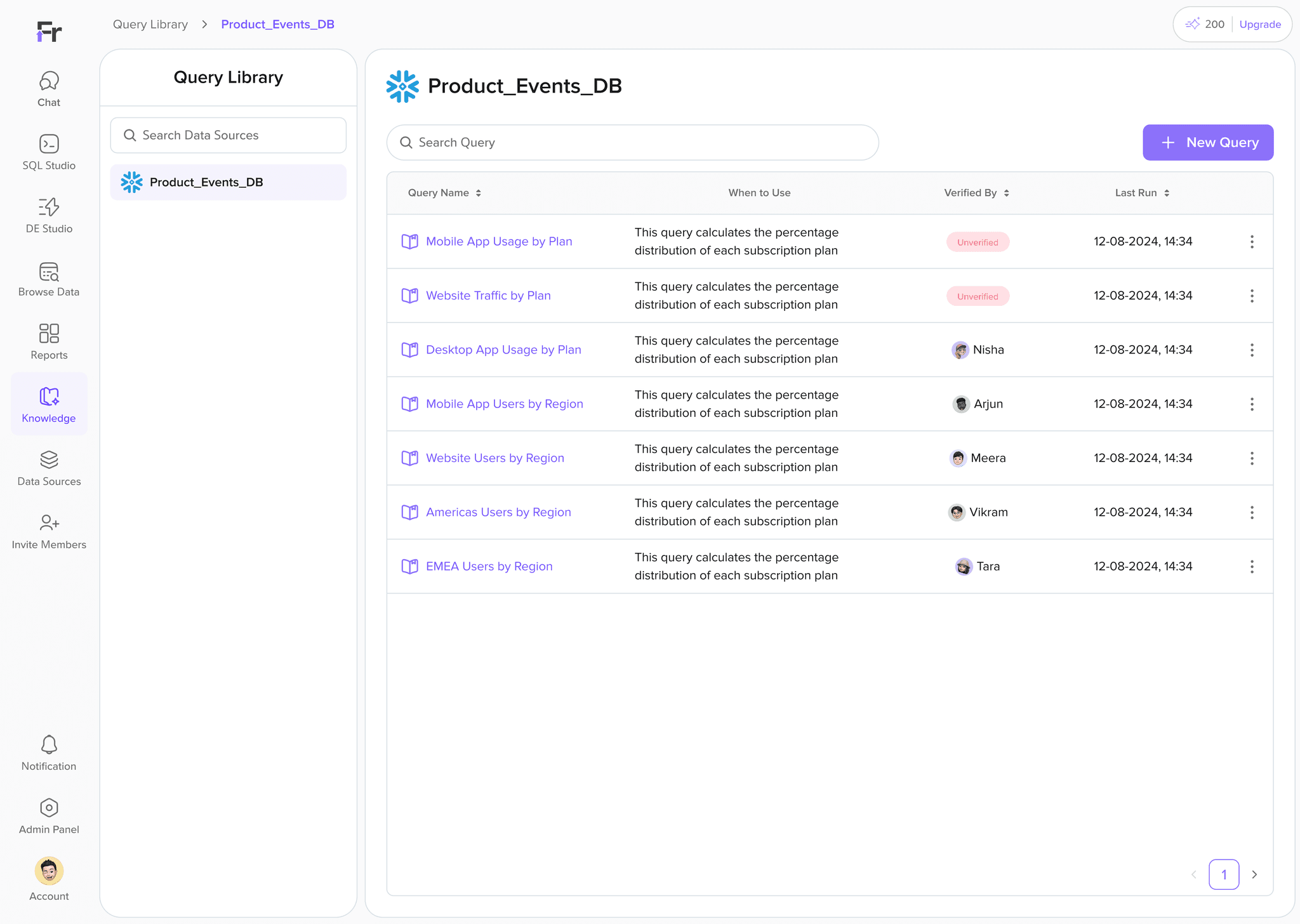

Saved Queries

Coming soon

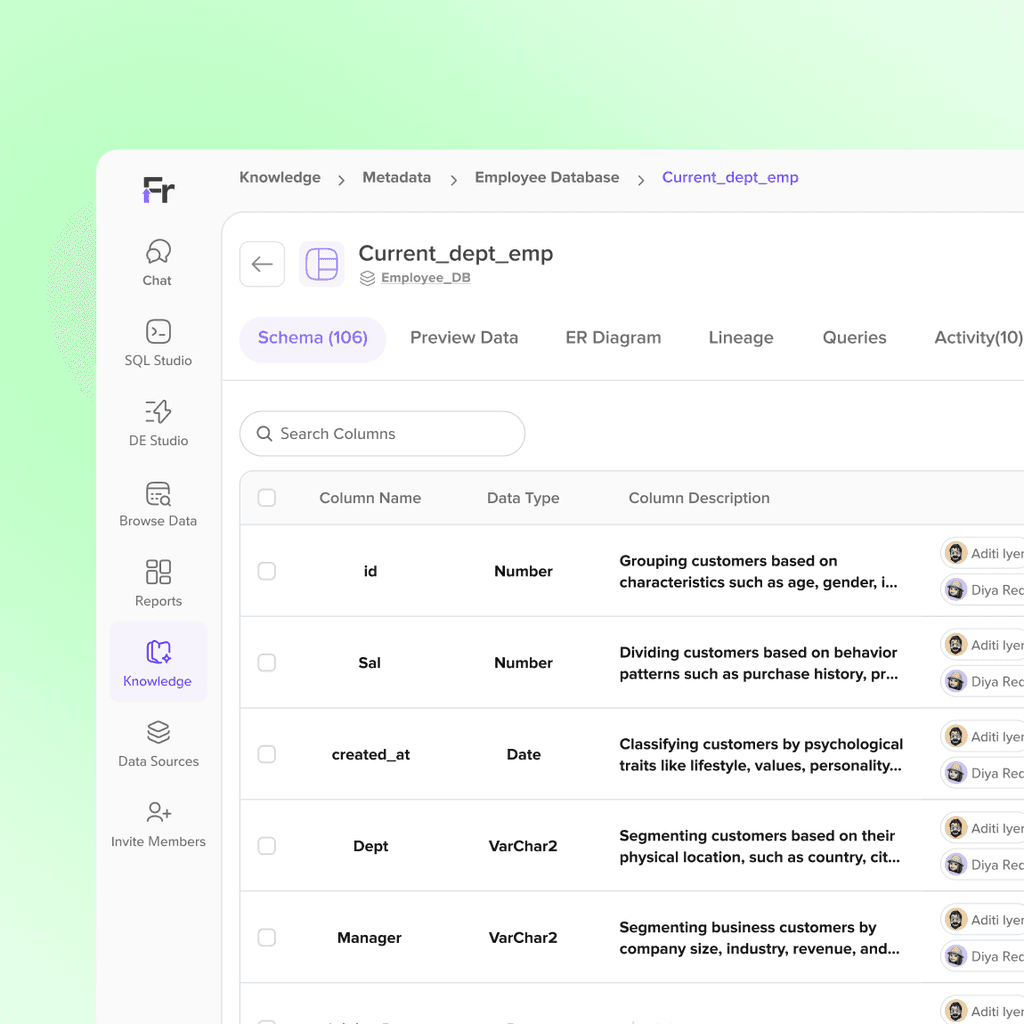

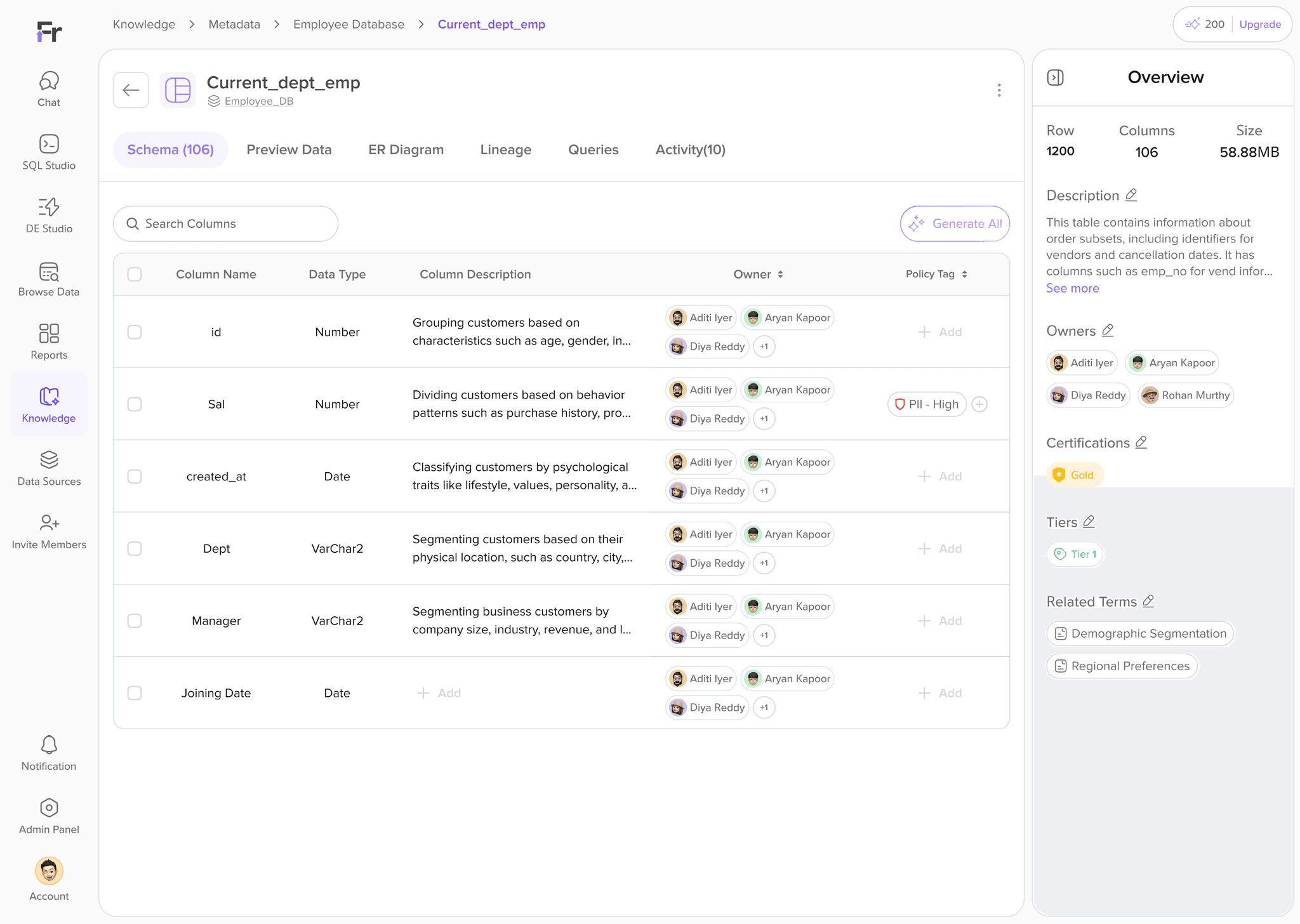

Metadata

Coming soon

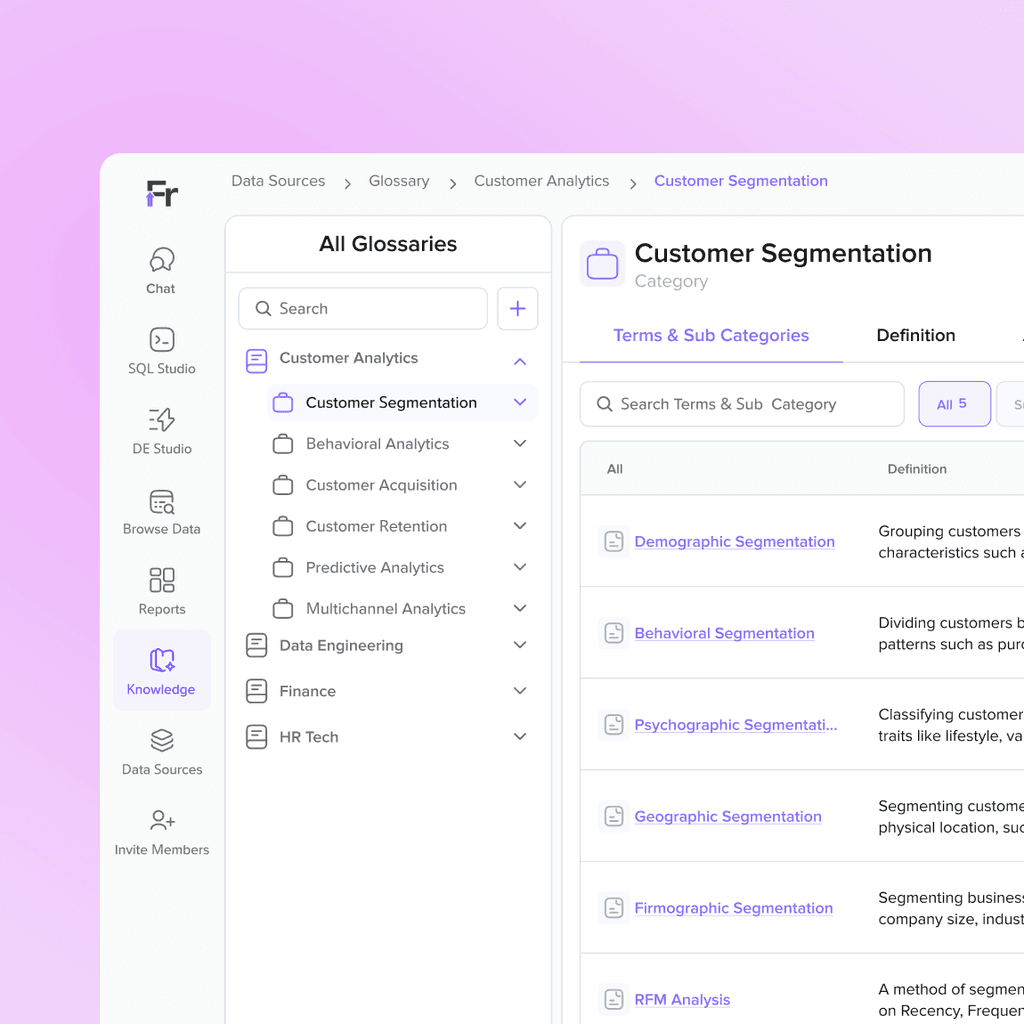

Business Glossary

Coming soon

First, we designed a feedback mechanism where AI outputs could either be sent for verification by an analyst or saved as a “verified query” for future use.

Benefits:

-Built a knowledge loop: the AI learned from verified queries.

-Reduced repeated mistakes; the AI reused trusted queries.

-Analysts trusted the system more because they controlled the verification step.

Saved queries reduced response time by 20%, but only when the same or a similar query was asked.

They cut token consumption by 100% for identical or similar questions, which directly affected cost: the cost per query dropped by 50% with saved queries.

But the issue with queries on unknown databases persisted.
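To make the saved-query idea concrete, here is a minimal sketch of a verified-query store, assuming questions are matched by normalized text with a fuzzy fallback. The class name, the `0.85` threshold, and the use of `difflib` string similarity are my assumptions for illustration; the shipped product may match similar questions differently (for example with embeddings).

```python
import re
from difflib import SequenceMatcher
from typing import Dict, Optional


def normalize(question: str) -> str:
    """Lowercase and strip punctuation so trivially different phrasings match."""
    return re.sub(r"[^a-z0-9 ]", "", question.lower()).strip()


class VerifiedQueryStore:
    """Hypothetical store of analyst-verified question -> SQL pairs."""

    def __init__(self, threshold: float = 0.85):
        self.threshold = threshold  # assumed similarity cutoff
        self._queries: Dict[str, str] = {}

    def save(self, question: str, sql: str) -> None:
        self._queries[normalize(question)] = sql

    def lookup(self, question: str) -> Optional[str]:
        key = normalize(question)
        if key in self._queries:  # exact match after normalization
            return self._queries[key]
        best_sql, best_score = None, 0.0
        for saved, sql in self._queries.items():
            score = SequenceMatcher(None, key, saved).ratio()
            if score > best_score:
                best_sql, best_score = sql, score
        # Reuse a saved query only when it is similar enough.
        return best_sql if best_score >= self.threshold else None


store = VerifiedQueryStore()
store.save("What was total revenue last month?", "SELECT SUM(net_revenue) FROM orders")
print(store.lookup("What was the total revenue last month?"))
print(store.lookup("How many users signed up?"))
```

A hit skips SQL generation entirely, which is where the token and cost savings come from. A miss returns `None` and falls back to the normal generation path, so the store does nothing for questions on a database nobody has verified queries for yet.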

Next, we designed metadata for each table and column, giving the AI explicit context about the data source and its entities.

What worked: Human-authored metadata dramatically improved AI query accuracy.

What didn’t: Manual metadata creation was time-consuming and unsustainable.

Pivot: We explored AI-generated metadata.

Initial tests: human-authored metadata outperformed AI-generated metadata.

Later improvements: AI-generated metadata reached a usable quality level, making it a scalable way to bootstrap context.

Metadata improved the quality and accuracy of answers on both known and unknown databases.

But it could not store the specific business terms or formulas that business users commonly rely on.
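As a rough sketch of what table and column metadata can look like, the structure below attaches a description to each table and column and renders it as plain text that could be placed in the model's context. The class names, the `render_context` helper, and the sample descriptions are hypothetical; only the column names `rev_usd` and `net_revenue` come from the schema example earlier in this case study.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ColumnMeta:
    name: str
    description: str


@dataclass
class TableMeta:
    name: str
    description: str
    columns: List[ColumnMeta] = field(default_factory=list)


def render_context(tables: List[TableMeta]) -> str:
    """Flatten metadata into text the model can read alongside the question."""
    lines = []
    for table in tables:
        lines.append(f"Table {table.name}: {table.description}")
        for col in table.columns:
            lines.append(f"  - {col.name}: {col.description}")
    return "\n".join(lines)


# Sample descriptions are invented for illustration.
orders = TableMeta(
    name="orders",
    description="One row per customer order.",
    columns=[
        ColumnMeta("rev_usd", "Gross order value in USD, before refunds."),
        ColumnMeta("net_revenue", "Order value after refunds and discounts."),
    ],
)
print(render_context([orders]))
```

The descriptions say what a column holds, but they have nowhere to put a company-wide term or formula, which is the gap described above.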

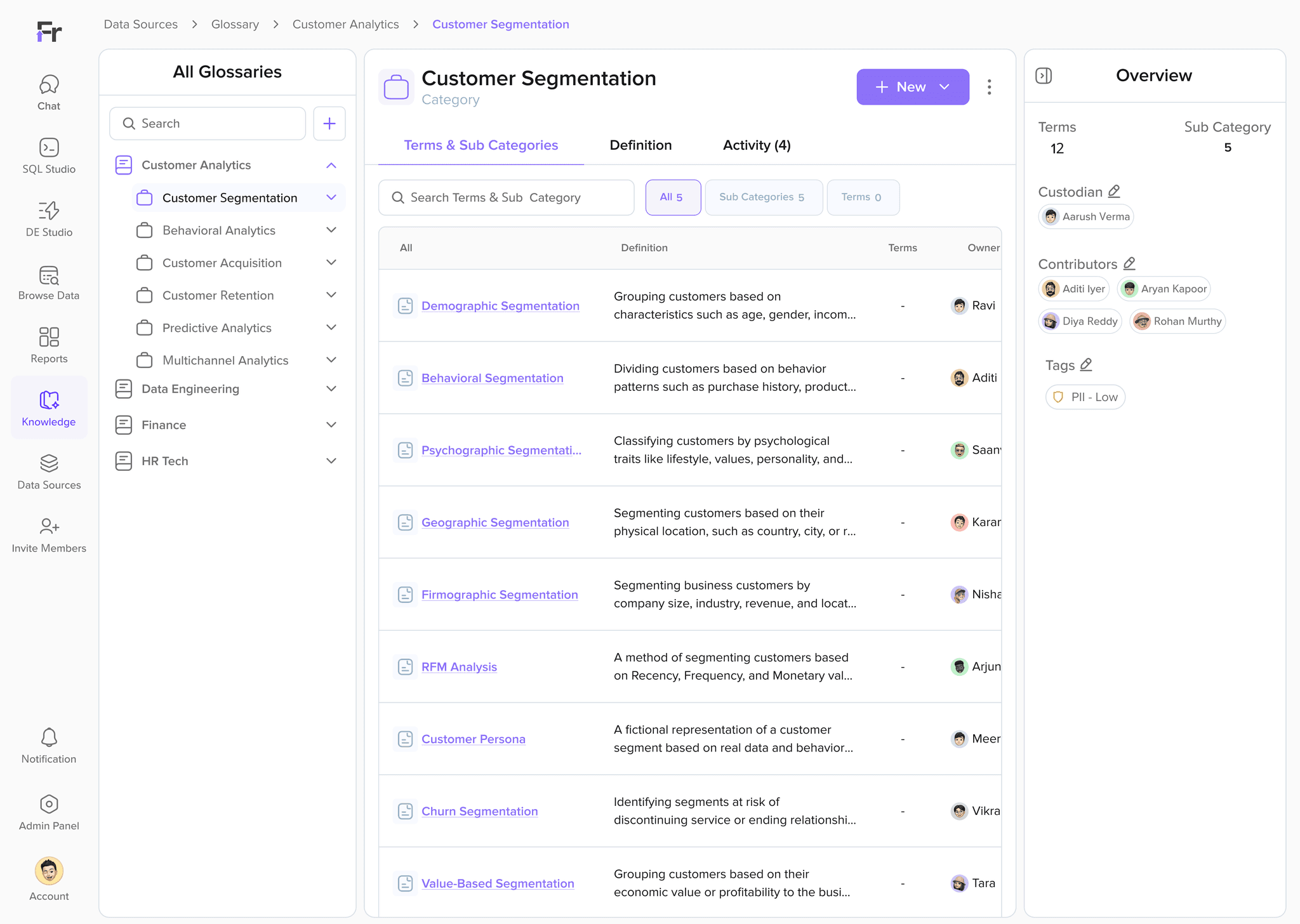

Finally, we designed a business glossary, a structured knowledge base of company-approved terms.

Glossary entries included:

-Definitions

-Synonyms & acronyms

-Related terms

-Associated columns/tables
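The entry fields above map naturally onto a small data structure. This is a sketch under my own assumptions, not the production schema: `GlossaryEntry`, `Glossary`, and `resolve` are hypothetical names, and the sample entry is invented. It shows how a synonym such as “sales” can resolve to one approved term and its associated column.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class GlossaryEntry:
    term: str
    definition: str
    synonyms: List[str] = field(default_factory=list)       # synonyms & acronyms
    related_terms: List[str] = field(default_factory=list)
    columns: List[str] = field(default_factory=list)        # associated table.column names


class Glossary:
    """Hypothetical lookup that indexes each entry by its term and synonyms."""

    def __init__(self, entries: List[GlossaryEntry]):
        self._index: Dict[str, GlossaryEntry] = {}
        for entry in entries:
            for name in [entry.term, *entry.synonyms]:
                self._index[name.lower()] = entry

    def resolve(self, word: str) -> Optional[GlossaryEntry]:
        """Return the approved entry for a word, or None if it is not defined."""
        return self._index.get(word.lower())


# Invented sample entry for illustration.
glossary = Glossary([
    GlossaryEntry(
        term="Net Revenue",
        definition="Order value after refunds and discounts.",
        synonyms=["sales", "NR"],
        related_terms=["Gross Revenue"],
        columns=["orders.net_revenue"],
    )
])

entry = glossary.resolve("Sales")
print(entry.term, entry.columns)
print(glossary.resolve("bookings"))
```

A word with no entry returns `None`, which gives the interface a natural moment to ask the user what they mean instead of letting the model guess.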

Why it mattered:

-Provided rich semantic grounding for the AI.

-Ensured alignment with business definitions, not just raw data.

-Reduced ambiguity across departments.

The business glossary covered the storage of business terms and formulas.

But a glossary alone cannot solve accuracy, because a term definition is not the same thing as a column description.

We evaluated every approach we could use to improve accuracy. Each solution had its pros and cons, and we realized that no single one would solve accuracy on its own, but combining all of them into a knowledge base could. We therefore decided to implement all three solutions in stages.

This project involved a ton of work, exploration, and collaboration with stakeholders. It’s hard to sum up everything I’ve learned, but if I had to narrow it down, here’s what stands out!

Working on this project was both incredibly fun and pretty stressful, as I was diving into a domain that was completely new to me. The upside, though, was that I came in with a fresh perspective, free from the biased workflows of AI engineers.

During this project, I explored over 30 AI platforms, and through that process, I realized just how quickly AI is evolving. It’s fascinating to see that AI essentially mirrors the human brain: it learns the way we learn, by observing, adapting, and improving with experience.